A paper on US temperature adjustments was published in Geophysical Research Letters on February 5. The full text is available as a pdf (1.5MB). The University of York has information about the paper, including handy links to data.

The paper examines and offers improvements to adjustments to the continental US surface temperature record. The abstract is easy to read and understand:

Numerous inhomogeneities including station moves, instrument changes, and time of observation changes in the US Historical Climatological Network (USHCN) complicate the assessment of long-term temperature trends. Detection and correction of inhomogeneities in raw temperature records have been undertaken by NOAA and other groups using automated pair-wise neighbour comparison approaches, but these have proven controversial due to the large trend impact of homogenization in the United States. The new US Climate Reference Network (USCRN) provides a homogeneous set of surface temperature observations that can serve as an effective empirical test of adjustments to raw USHCN stations. By comparing nearby pairs of USHCN and USCRN stations, we find that adjustments make both trends and monthly anomalies from USHCN stations much more similar to those of neighbouring USCRN stations for the period 2004-2015 when the networks overlap. These results improve our confidence in the reliability of homogenized surface temperature records.
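To make the abstract's test concrete, here is a minimal sketch of a paired trend comparison. It is not the authors' code and not NOAA's homogenization algorithm: the three series are synthetic, the step change and noise levels are invented for illustration, and the "adjusted" series simply has the step removed by construction. It shows only what "more similar to the neighbouring reference station" means when measured as a trend difference.

```python
import random

def ols_slope(values):
    """Least-squares slope of values against their index (units per step)."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den

def trend_difference(station, reference):
    """Trend of the station-minus-reference series, in degrees C per decade."""
    diff = [s - r for s, r in zip(station, reference)]
    return ols_slope(diff) * 120  # 120 months per decade

random.seed(0)
n_months = 142          # the overlap period, counted in months
break_month = n_months // 2

# Hypothetical reference-network anomalies (synthetic, no trend).
reference = [random.gauss(0.0, 0.5) for _ in range(n_months)]

# Hypothetical raw neighbour: same signal, small noise, and an invented
# -0.5 C step halfway through (standing in for a station move).
raw = [
    r + random.gauss(0.0, 0.1) + (-0.5 if t >= break_month else 0.0)
    for t, r in enumerate(reference)
]

# Hypothetical adjusted series: the step is removed by construction.
adjusted = [v + (0.5 if t >= break_month else 0.0) for t, v in enumerate(raw)]

raw_gap = trend_difference(raw, reference)
adj_gap = trend_difference(adjusted, reference)
print(f"raw minus reference trend:      {raw_gap:+.2f} C/decade")
print(f"adjusted minus reference trend: {adj_gap:+.2f} C/decade")
```

An uncorrected step shows up as a spurious trend relative to the reference, and removing it shrinks that gap. Whether a real algorithm finds and removes the right steps is exactly what the paper sets out to test.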

Slate’s response is straight out of the “I told you so” playbook but they haven’t actually read the paper. First, they say:

A common claim by climate change deniers is that scientists have been “altering” ground-based temperature data to make it look like the Earth is warming.

It certainly is a frequent claim, and there’s a reason for that: adjustments are frequently made with inadequate explanation. For example, Skeptical Science brazenly admits that GISS Arctic observations are often “extended” up to 1200 km from the thermometers, without explaining how such “readings” might be credible.

It would be the same as measuring the temperature in Invercargill and using it to describe Browns Bay, on Auckland’s North Shore, 1200 km away. In other words, ludicrous. Perhaps GISS’s Arctic extrapolation is reasonable, but until they explain it we cannot know.
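The distance in that comparison is easy to check with a great-circle calculation. The sketch below uses the standard haversine formula; the coordinates are approximate values supplied here for illustration, not figures from GISS or Skeptical Science.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * radius_km * math.asin(math.sqrt(a))

# Approximate coordinates (assumed for this example).
invercargill = (-46.41, 168.35)
browns_bay = (-36.72, 174.75)

distance = haversine_km(*invercargill, *browns_bay)
print(f"Invercargill to Browns Bay: about {distance:.0f} km")
```

With these coordinates the result lands close to the 1200 km figure.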

But then comes Slate’s alarmist conclusion:

The planet is heating up, and they’re measuring that. That’s what the data are telling us, that’s what the planet is telling us, and as long as our politicians in charge are sticking their fingers in their ears and yelling “LALALALALALA” as loudly as they can, we’ll never get off our oil-soaked butts and get anything done to prevent an environmental catastrophe.

Crisp writing, punchy message. But don’t be fooled—they’ve taken a decade and called it a century. The paper carefully points out their analysis “can only directly examine the period of overlap.” The Climate Reference Network was set up in January 2004, which makes the overlap precisely eleven years and ten months (January 2004 to October 2015). Near a decade; far from a century—yet that’s the minimum required to establish unprecedented warming.
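For anyone who wants to check the overlap arithmetic, here is a small sketch that counts the months inclusively, so both January 2004 and October 2015 are included. The function name is just for illustration.

```python
def months_inclusive(start_year, start_month, end_year, end_month):
    """Number of calendar months from start to end, counting both ends."""
    return (end_year - start_year) * 12 + (end_month - start_month) + 1

total_months = months_inclusive(2004, 1, 2015, 10)
years, months = divmod(total_months, 12)
print(f"{total_months} months = {years} years and {months} months")
# prints "142 months = 11 years and 10 months"
```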

But Slate improbably misconstrues more than this. They can see wild global warming. But here are the temperatures during the comparison period:

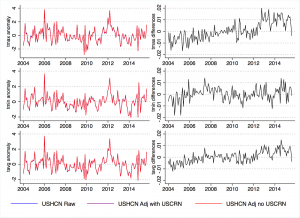

Raw and adjusted CONUS temperature data. Precious little warming – none to worry about.

The graph shows no warming during the period of study and of course it says nothing about the previous hundred years. Slate mentions warming, but the paper doesn’t: its conclusion is carefully fixed, as it should be, on the accuracy of the adjustments made by the algorithm they chose:

This work provides an important empirical test of the effectiveness of temperature adjustments similar to Vose et al. [2012], and lends support [to] prior work by Williams et al. [2012] and Venema et al. [2012] that used synthetic datasets to find that NOAA’s pairwise homogenization algorithm is effectively removing localized inhomogeneities in the temperature record without introducing detectable spurious trend biases.

So, when you actually read it, the paper offers no grounds at all for Slate’s fact-free “finding” that:

The planet is heating up, and they’re measuring that. That’s what the data are telling us.

Phooey. This paper is a proper scientific response to a controversial topic and it concludes that the adjustments made over the past 12 years to USHCN records brought them more into line with the new and more reliable USCRN. It says nothing about warming except for this:

However, the net effect of adjustments on the USHCN is quite large, effectively doubling the mean temperature trend over the past century compared to the raw observational data [Menne et al. 2009]. This has resulted in a controversy in the public and political realm over the implications of much of the observed US warming apparently resulting from temperature adjustments.

Which, dear reader, establishes the authors’ solid honesty, since they have not covered up the appearance of deception. Certainly they are more courageous than our own NIWA, who pour scorn on similar assertions about their temperature adjustments, to the extent that, rather than release details of their methodology, they force questioners into court.

Slate draws conclusions that owe more to their agenda than to the paper they review.

However, it is regrettable that the authors also strain to wrench an unnatural conclusion from their data which perhaps enticed Slate to go over the top. For the paper concludes (emphasis added):

While this analysis can only directly examine the period of overlap, the effectiveness of adjustments during this period is at least suggestive that the PHA [the Pairwise Homogenization Algorithm chosen to adjust the USHCN] will perform well in periods prior to the introduction of the USCRN, though this conclusion is somewhat tempered by the potential changing nature of inhomogeneities over time.

Which expression of hope is nothing more than a declaration of bias. At least they’re embarrassed enough by such naked prejudice to attempt to water it down by admitting some data irregularities change over time. They’re referring to trees increasingly shading the weather station or sheltering it from prevailing winds.

This is an interesting and useful paper which in Slate’s review has been undeservedly recruited for shameful purposes.


>”the PHA [the Pairwise Homogenization Algorithm chosen to adjust the USHCN]”

If only GISS would leave it at that but no, GISS seem intent on making temperature conform to the linear log Temp/CO2 model. Jeff Patterson leads with this in his solar model post at WUWT:

‘Temperature versus CO2’

Greenhouse gas theory predicts a linear relationship between the logarithm of CO2 atmospheric concentration and the resultant temperature anomaly. Figure 1 is a scattergram comparing the Hadcrut4 temperature record to historical CO2 concentrations.

Figure 1a – Hadcrut4 temperature anomaly vs. CO2 concentration (logarithmic x-scale); (b) Same as Figure 1 with Gaussian filtering (r=4) applied to temperature data

https://wattsupwiththat.files.wordpress.com/2016/02/image15.png

Updated with post 2013 data to present:

https://wattsupwiththat.files.wordpress.com/2016/02/fig1.png

At first glance Figure 1a appears to confirm the theoretical log-linear relationship. However if Gaussian filtering is applied to the temperature data to remove the unrelated high frequency variability a different picture emerges.

Figure 1b contradicts the assertion of a direct relationship between CO2 and global temperature. Three regions are apparent where temperatures are flat to falling while CO2 concentrations are rising substantially. Also, a near step-change in temperature occurred while CO2 remained nearly constant at about 310 ppm. The recent global warming hiatus is clearly evident in the flattening of the curve above 380 ppm.
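For readers who want to try the two ingredients described above, here is a rough sketch of a log-CO2 temperature relation and a Gaussian smoother. It is not Jeff Patterson's code. The baseline of 280 ppm and the 3.0 degrees per doubling are arbitrary illustrative values, and treating "r=4" as a kernel width of 4 samples is an assumption, since the quoted text does not define it.

```python
import math

def log_co2_anomaly(co2_ppm, baseline_ppm=280.0, per_doubling=3.0):
    """Anomaly that is linear in log2(CO2); both defaults are illustrative."""
    return per_doubling * math.log2(co2_ppm / baseline_ppm)

def gaussian_smooth(series, sigma=4.0):
    """Gaussian-weighted moving average, renormalised near the ends."""
    half = int(3 * sigma)  # truncate the kernel at three sigma
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    n = len(series)
    smoothed = []
    for i in range(n):
        total = 0.0
        weight = 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < n:  # skip points that fall outside the series
                total += w * series[j]
                weight += w
        smoothed.append(total / weight)
    return smoothed

# Synthetic example: a log-CO2 curve plus an alternating wiggle that
# stands in for high-frequency variability.
co2 = [300 + 2 * i for i in range(50)]
noisy = [log_co2_anomaly(c) + (0.2 if i % 2 else -0.2) for i, c in enumerate(co2)]
filtered = gaussian_smooth(noisy)
print(f"first raw value {noisy[0]:.3f}, first filtered value {filtered[0]:.3f}")
```

The smoother removes the fast wiggle and leaves the slow curve. What it does to real HadCRUT4 data depends on the filter width chosen, which is the part assumed here.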

[…]

A much better correlation exists between atmospheric CO2 concentration and the variation in total solar irradiance (TSI).

http://wattsupwiththat.com/2016/02/08/a-tsi-driven-solar-climate-model/comment-page-1/#comment-2141847

# # #

Futility by GISS in the long run as evidenced above once filtering is applied to HadCRUT4 and does nothing to reconcile GMST with CO2-forced GSMs which are becoming increasingly out of whack.

And temperature is not the IPCC’s primary climate change criterion anyway, i.e. they are tweaking a secondary effect, but that has no bearing on the primary criterion. They have to win the primary argument first, they haven’t, and even in the secondary argument solar models like Jeff Patterson’s are crashing the temperature party.

BTW RT, in that solar model thread I posted the argument:

Attracted Nick Stokes and Ferdinand Engelbeen.

>”Futility by GISS in the long run as evidenced above once filtering is applied to HadCRUT4 and does nothing to reconcile GMST with CO2-forced GSMs which are becoming increasingly out of whack.”

Physics Club: Gavin Schmidt, NASA Goddard Institute for Space Studies, “The Physics of Climate Modeling”

Speaker/Performer: Gavin Schmidt, NASA Goddard Institute for Space Studies

Description: Climate is a large-scale phenomenon that emerges from complicated interactions among small-scale physical systems. Yet despite the phenomenon’s complexity, climate models have demonstrated some impressive successes. Climate projections made with sophisticated computer codes inform the world’s policymakers about the potential dangers of anthropogenic interference with Earth’s climate system, attribute historical changes to various drivers and test hypotheses for climate changes in the past. But what physics goes into the models, how are the models evaluated, and how reliable are they?

http://calendar.yale.edu/cal/physics/week/20160215/All/CAL-2c9cb3cc-520310a5-0152-13870275-00003b72bedework@yale.edu/

# # #

>”climate models have demonstrated some impressive successes”

Tom Nelson’s graphed response: https://twitter.com/tan123/status/697460397985509376

>”Climate projections made with sophisticated computer codes inform the world’s policymakers about the potential dangers of anthropogenic interference with Earth’s climate system……….But what physics goes into the models, how are the models evaluated, and how reliable are they?”

It would be interesting to hear Gavin Schmidt on this, in view of the models-obs discrepancy graphed above.

Who is “Slate” and why should I care? Other than that omission, a bloody good article Mr. T.

Thanks, Mike. Slate is a long-term climate alarmist website. Not worth much, but articles like this need some push-back. You’re right, I should take a sentence to say why I oppose them. Cheers.